So how does AI actually translate?

Theory

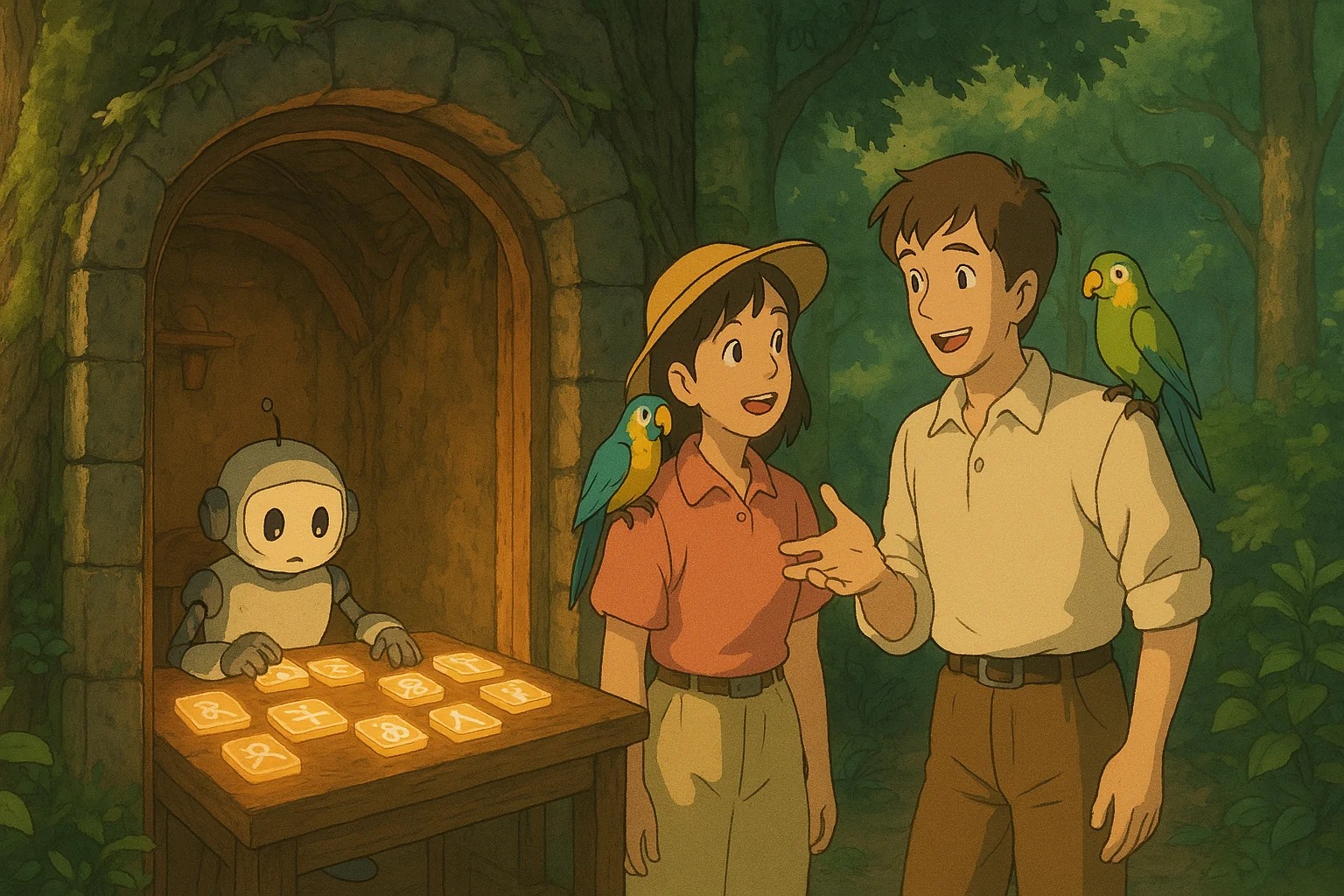

In 1980, the philosopher John Searle devised an odd but compelling thought experiment known as the Chinese Room. Imagine someone locked in a room with a massive rulebook. By following the rules to match symbols, they can hand back flawless responses in Chinese, yet they do not understand a word of Chinese. From the outside, they seem to know the language. But inside? Not a clue. Just symbol-shuffling. Searle’s point: a machine may seem intelligent, but that does not mean it really gets anything.

Now fast-forward to today. ChatGPT, Claude, Gemini: all these massive AI models trained on text from the internet are here. Rumour has it that GPT-4 alone has 1.8 trillion parameters. These models can pass the bar exam, write essays, debug code, even crack jokes. Two years ago, over 92% of Fortune 500 companies were already adopting this tech (OpenAI, 2023). My guess is that it is close to 100% now. The twist is that under the hood it still just follows patterns. Predict the next word. Then the next. Then the next. It is really excellent at this, but it is still syntactic, not semantic. Much like the Chinese Room.

So why does it feel different? Because we have piled a lot of clever machinery on top: RLHF (reinforcement learning from human feedback, a fancy term for giving the model feedback until it behaves better), prompt engineering, memory tricks, even tool use such as search and calculators. These features make the model seem far more intelligent than it actually is. Still, the central engine is that same “guess the next token” device. Fast and elegant, but still in the room with the rulebook.
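To make the “guess the next token” idea concrete, here is a minimal sketch of the simplest possible next-word predictor: it counts which word follows which in a tiny made-up corpus. Real LLMs use neural networks over subword tokens rather than raw counts, so treat `train_bigrams`, `predict_next`, and the corpus as illustrative assumptions only.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Toy corpus, invented for this example.
corpus = "the cat sat on the mat . the cat ate the fish . the cat slept"
model = train_bigrams(corpus)

print(predict_next(model, "the"))    # most common word after "the" in the corpus
print(predict_next(model, "zebra"))  # unseen word, so no prediction
```

The predictor has no idea what a cat is. It only knows that “cat” tends to follow “the” in its training text, which is the rulebook-in-the-room picture in miniature.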

An LLM is essentially one brilliant predictive algorithm, powered by trillions of tokens of human knowledge, with a lot of “tuning” on top. It beats many humans in many areas; that’s totally true.

And the crucial question is: does it matter? Do you care whether an LLM understands, if it can help you translate papers in seconds, code faster, or write better emails? Probably not. Perhaps in the real world “looking smart” is enough. But if we are to rely on artificial intelligence for major decisions, we must learn to tell the difference between real intelligence and a player piano hooked up to the Internet.

Practice

Let’s ditch the buzzwords and get practical. If you’re a translator, editor, or localization project manager, chances are you’ve seen machine translation improve radically over the last few years.

Let me show you — not with vague theory, but real multilingual examples and approximate numbers behind the scenes.

1. Words Are Numbers Now. Literally.

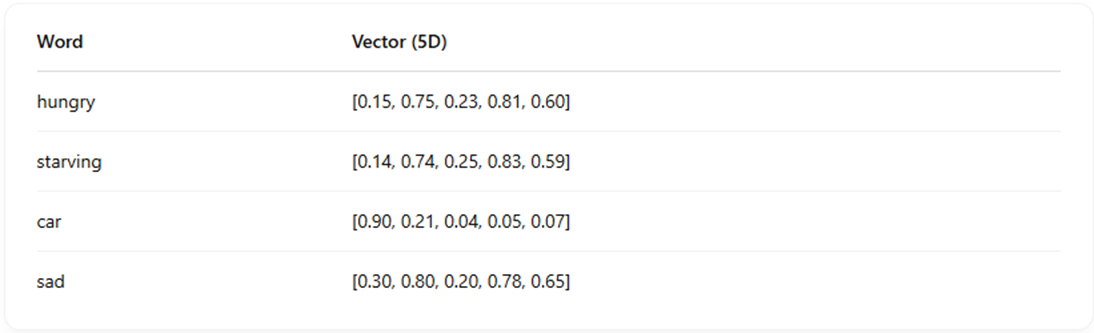

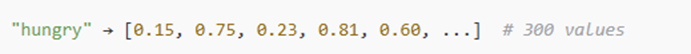

In AI models, words aren’t stored as strings. They’re stored as vectors — long lists of numbers that capture the meaning of a word in context.

Here’s a simplified example (real vectors have hundreds or even thousands of dimensions, but let’s use just 5 for clarity):

Notice how hungry and starving are close in vector space — because they often appear in similar contexts.
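One common way to measure “close in vector space” is cosine similarity. The sketch below uses hypothetical 5-dimensional vectors, invented purely for illustration (they do not come from any real model), and compares two near-synonyms with an unrelated word.

```python
import math

# Hypothetical 5-dimensional vectors, invented for illustration only.
EMBEDDINGS = {
    "hungry":   [0.8, 0.1, 0.6, 0.3, 0.2],
    "starving": [0.9, 0.1, 0.7, 0.2, 0.2],
    "table":    [0.1, 0.9, 0.1, 0.7, 0.5],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

close = cosine_similarity(EMBEDDINGS["hungry"], EMBEDDINGS["starving"])
far = cosine_similarity(EMBEDDINGS["hungry"], EMBEDDINGS["table"])

print(f"hungry vs starving: {close:.2f}")
print(f"hungry vs table:    {far:.2f}")
```

With these made-up numbers, the first score comes out much higher than the second, which is the kind of pattern a trained model is expected to show for words used in similar contexts.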

2. Translating Pinocchio: Italian → English Using Vectors

Let’s take a sentence from Pinocchio:

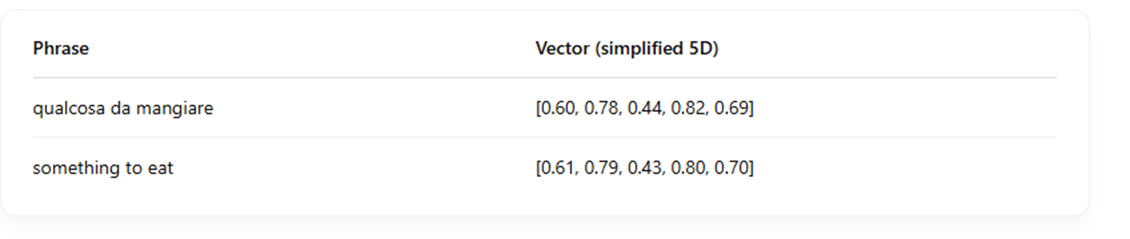

Italian: “Ma il povero Pinocchio, che aveva sempre fame, chiese che gli dessero qualcosa da mangiare.”

English: “But poor Pinocchio, who was always hungry, asked them to give him something to eat.”

“qualcosa da mangiare” ≈ “something to eat”

The model doesn’t look this up in a dictionary. It learns from training data that the vectors for the two phrases are extremely close, so the AI confidently selects “something to eat”, even if it has never seen this exact sentence before.
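The selection step can be pictured as a nearest-neighbour search: given the vector for the source phrase, pick the candidate whose vector lies closest. The sketch below assumes hypothetical 5-dimensional vectors and a hypothetical fixed candidate list. Real translation models generate output token by token rather than choosing from a menu, so this only illustrates the “closest vector wins” intuition.

```python
import math

# Hypothetical vector for the Italian phrase "qualcosa da mangiare".
SOURCE = [0.62, 0.81, 0.40, 0.22, 0.55]

# Hypothetical vectors for a few English candidates.
CANDIDATES = {
    "something to eat":   [0.61, 0.80, 0.41, 0.23, 0.54],
    "something to drink": [0.58, 0.35, 0.44, 0.70, 0.50],
    "a place to sleep":   [0.10, 0.20, 0.90, 0.60, 0.15],
}

def rank_by_distance(query, candidates):
    """Return candidate names sorted from nearest to farthest (Euclidean distance)."""
    return sorted(candidates, key=lambda name: math.dist(query, candidates[name]))

ranking = rank_by_distance(SOURCE, CANDIDATES)
best = ranking[0]
print(best)
```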

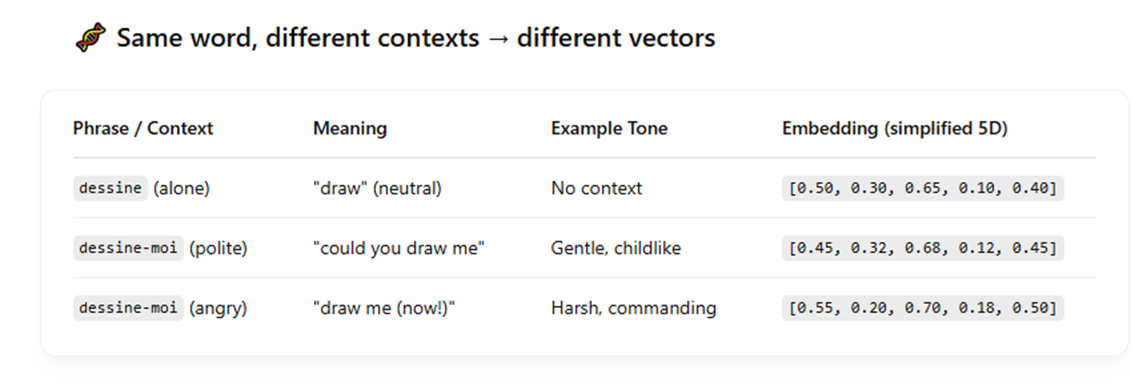

3. How Context Changes Everything: French Example

“Dessine-moi un mouton.” Literal: “Draw me a sheep.” But in tone? — “Could you draw me a sheep?”

While “dessine” means “draw,” it can be interpreted very differently depending on the tone, audience, and context — something transformer models handle via contextual embeddings.

In older models (like word2vec), “dessine” would always have the same vector. In transformer-based models (like GPT), the embedding is recalculated per context.

The transformer model sees the whole sentence and assigns a custom vector to “dessine-moi” based on the words around it.

That’s what makes LLMs feel more natural: the meaning isn’t just in the word, it’s in the relationship between words.
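The difference between a fixed vector and a contextual one can be sketched with a stripped-down version of self-attention: each word's output vector becomes a weighted mix of every word in the sentence. This is a simplification under stated assumptions. Real transformers add learned query, key, and value projections, multiple heads, and many layers, and the 3-dimensional `STATIC` vectors below are invented for the example.

```python
import math

# Hypothetical static (context-free) vectors, invented for illustration.
STATIC = {
    "dessine": [0.9, 0.1, 0.3],
    "moi":     [0.2, 0.8, 0.1],
    "un":      [0.1, 0.2, 0.1],
    "mouton":  [0.3, 0.1, 0.9],
    "plan":    [0.7, 0.6, 0.2],
}

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def contextualize(tokens):
    """One simplified self-attention step without learned projections."""
    vectors = [STATIC[t] for t in tokens]
    dim = len(vectors[0])
    output = []
    for query in vectors:
        weights = softmax([dot(query, key) / math.sqrt(dim) for key in vectors])
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]
        output.append(mixed)
    return output

# The same word, "dessine", in two different sentences.
in_story = contextualize(["dessine", "moi", "un", "mouton"])[0]
in_office = contextualize(["dessine", "un", "plan"])[0]

print([round(x, 3) for x in in_story])
print([round(x, 3) for x in in_office])
```

The static lookup returns the same three numbers for “dessine” every time, while the two contextual vectors differ because the neighbouring words differ.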

4. German Word Order: Grimm’s Fairy Tales

“Dann legte er sich in ihr Bett.” Literal: “Then he lay down in her bed.”

AI models learn representations for chunks of phrases (collocations), not just isolated words.

| Phrase | Vector (5D) |
| --- | --- |
| legte sich (reflexive verb) | [0.52, 0.65, 0.33, 0.70, 0.60] |
| lay down | [0.51, 0.64, 0.32, 0.71, 0.61] |

They’re almost identical. So even if legte sich is rare in isolation, the model knows it matches “lay down” in this context.
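As a quick check on “almost identical”, the two example vectors for “legte sich” and “lay down” can be compared directly. The numbers are the article's own illustrative values. The variable names and the choice of cosine similarity plus largest per-dimension gap are assumptions made for this sketch.

```python
import math

# Illustrative 5D vectors taken from the example above.
legte_sich = [0.52, 0.65, 0.33, 0.70, 0.60]
lay_down = [0.51, 0.64, 0.32, 0.71, 0.61]

# Cosine similarity: dot product divided by the product of the lengths.
dot_product = sum(a * b for a, b in zip(legte_sich, lay_down))
length_de = math.sqrt(sum(a * a for a in legte_sich))
length_en = math.sqrt(sum(b * b for b in lay_down))
similarity = dot_product / (length_de * length_en)

# Largest gap between the two vectors in any single dimension.
largest_gap = max(abs(a - b) for a, b in zip(legte_sich, lay_down))

print(f"cosine similarity: {similarity:.4f}")
print(f"largest per-dimension gap: {largest_gap:.2f}")
```

No coordinate differs by more than about 0.01, and the cosine similarity is correspondingly very close to 1.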

5. Rare Words? Subword Embeddings to the Rescue

“Desfacer entuertos” (archaic) ≈ “right wrongs”

Even if “desfacer” is rare, the AI splits it:

- des-
- facer → [0.7, 0.5, 0.4, 0.3, 0.4]

Combined vector → [0.9, 0.8, 0.5, 0.3, 0.5] ≈ to undo

This is what makes AI so good at morphologically rich languages — even weird, invented, or poetic words can be translated thanks to subword training.
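The splitting step can be sketched as a greedy longest-match segmentation against a vocabulary of known pieces. This is a simplification: production tokenizers (BPE, WordPiece, SentencePiece) learn their vocabularies from data and may split these words differently. The `VOCAB` set and the `segment` function below are hypothetical.

```python
# Hypothetical subword vocabulary, invented for this example.
VOCAB = {"des", "facer", "hacer", "en", "tuert", "os"}

def segment(word, vocab):
    """Split `word` into the longest known pieces, scanning left to right."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        # Shrink the window until the slice is a known piece.
        while end > start and word[start:end] not in vocab:
            end -= 1
        if end == start:
            # Nothing matched: fall back to a single character.
            end = start + 1
        pieces.append(word[start:end])
        start = end
    return pieces

print(segment("desfacer", VOCAB))
print(segment("entuertos", VOCAB))
```

Because every piece has its own vector, a word the model has rarely or never seen whole can still be represented through parts it has seen many times.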

6. The tricky part.

Are AI “dimensions” like human dimensions (formality, tone, sarcasm)?

When we say word embeddings live in “300 dimensions”, we don’t mean those dimensions correspond to anything like formality, tone, or sarcasm.

They’re not labeled. Not interpretable. Not human.

These are latent dimensions, learned by the model as mathematical patterns that optimize performance on a translation task. Some might correlate with aspects like formality, but:

- They are emergent, not designed.
- We don’t really know what each number means.
- The AI can’t explain its reasoning.
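One way to see why a single coordinate carries no fixed meaning: if you rotate the whole coordinate system, the individual numbers change, yet the distances between words stay exactly the same. The sketch below uses two hypothetical 5-dimensional vectors and a simple rotation of the first two axes. It illustrates the geometry only and makes no claim about how any specific model is trained.

```python
import math

# Hypothetical vectors, invented for illustration.
hungry = [0.8, 0.1, 0.6, 0.3, 0.2]
starving = [0.9, 0.1, 0.7, 0.2, 0.2]

def rotate_first_two_axes(vec, angle):
    """Rotate the vector in the plane of dimensions 0 and 1, leaving the rest alone."""
    c, s = math.cos(angle), math.sin(angle)
    x, y = vec[0], vec[1]
    return [c * x - s * y, s * x + c * y] + list(vec[2:])

angle = math.pi / 3
hungry_rot = rotate_first_two_axes(hungry, angle)
starving_rot = rotate_first_two_axes(starving, angle)

before = math.dist(hungry, starving)
after = math.dist(hungry_rot, starving_rot)

print([round(x, 3) for x in hungry_rot])  # first two numbers have changed
print(round(before, 6), round(after, 6))  # distance between the words has not
```

What the model relies on is the relative geometry between vectors, so “dimension 1” in one coordinate system is just a blend of several dimensions in another.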

More on this topic in the next article, to be published soon! I am not a native speaker of any of the languages above, so if you would like to fine-tune the examples, you are welcome to!